Generative AI, widely hailed as a revolutionary technology with vast potential for industries like healthcare, education, and entertainment, has also attracted the attention of cybercriminals. In 2025, the dark side of generative AI has become a serious concern for cybersecurity experts as malicious actors increasingly use its capabilities to carry out sophisticated cyberattacks.

At WaltCorp, we specialize in helping businesses stay ahead of evolving cybersecurity risks. In this article, we’ll explore how cybercriminals are exploiting generative AI, the threats it poses, and how organizations can safeguard themselves against these advanced cyberattacks.

The Rise of Generative AI in Cybercrime

Generative AI refers to machine learning models that can create content ranging from text and images to audio and code. With tools like OpenAI’s GPT models and deepfake technology, AI can generate content that is often difficult to distinguish from human-created material. While these technologies have many legitimate uses, they’ve also become powerful tools for cybercriminals.

In 2025, the use of generative AI in cybercrime has expanded significantly as AI models have become more accessible and better at producing realistic text, images, audio, and video. Cybercriminals now use generative AI to automate and scale their attacks, making them more difficult to detect and counter.

AI-Powered Phishing and Social Engineering

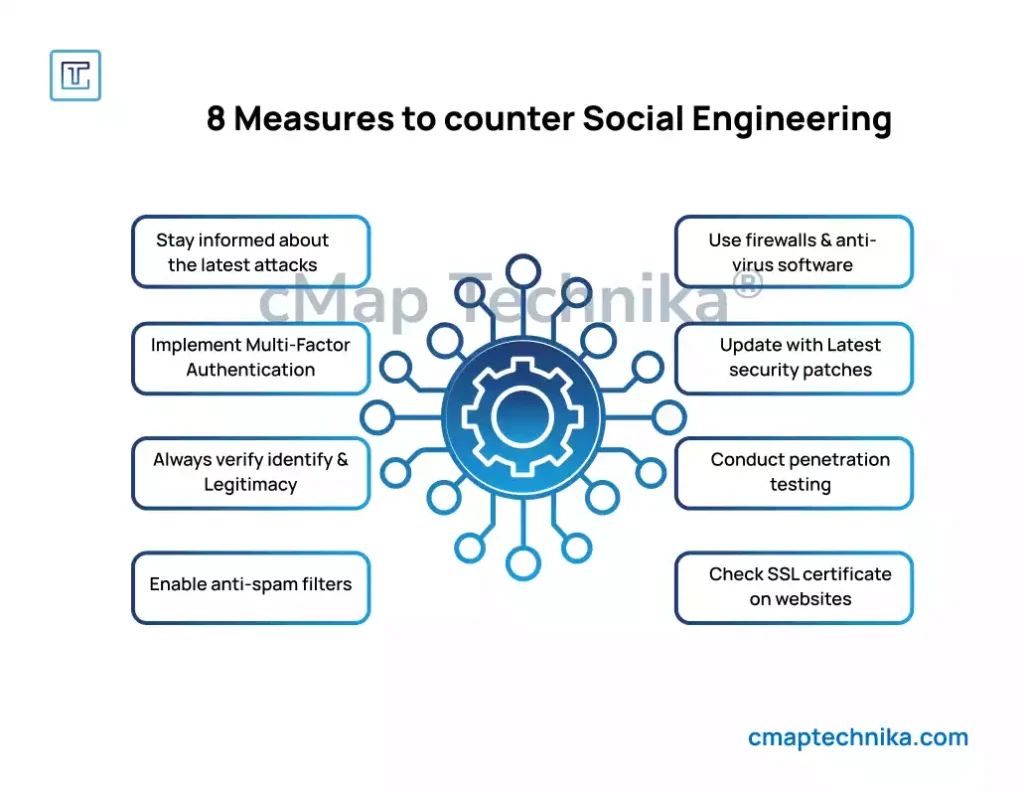

One of the most prevalent ways cybercriminals exploit generative AI is in phishing and social engineering attacks. Traditionally, phishing emails and messages were simple and often easy to spot: spelling errors, poor grammar, and generic language were red flags. With generative AI, however, cybercriminals can create polished, personalized phishing campaigns that are nearly indistinguishable from legitimate communications.

How It Works: Attackers can feed a generative AI model material gathered from an individual’s online presence, social media profiles, and even company communications, then have it produce phishing emails or text messages that closely mimic a familiar sender’s style and tone. Tailoring each message this way increases the likelihood of a successful attack.

Example: A cybercriminal could use a generative AI model to replicate an executive’s writing style, creating a convincing email that asks an employee to transfer funds or disclose sensitive information. Because each AI-generated message can be unique and free of the usual errors, these campaigns scale quickly and are harder for traditional defenses such as email filters to block.
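Because AI-written phishing no longer gives itself away through spelling or grammar, filters can lean more on signals that polished wording cannot hide, such as whether a message claiming to come from an executive actually originates from the company’s own domain. The Python sketch below illustrates one such rule under stated assumptions: the names `EXECUTIVE_NAMES`, `TRUSTED_DOMAINS`, `PAYMENT_KEYWORDS`, and `impersonation_flags` are hypothetical, and a real deployment would pull names and domains from directory data and complement, not replace, standard sender-authentication checks.

```python
from email.utils import parseaddr
from typing import List

# Hypothetical values for illustration only; replace with your own directory data.
EXECUTIVE_NAMES = {"jane doe", "john smith"}
TRUSTED_DOMAINS = {"example.com"}
PAYMENT_KEYWORDS = ("wire transfer", "bank details", "gift card", "urgent payment")


def impersonation_flags(from_header: str, body: str) -> List[str]:
    """Return human-readable reasons a message looks like executive impersonation."""
    display_name, address = parseaddr(from_header)
    domain = address.rpartition("@")[2].lower()
    reasons = []

    # An executive's name paired with an outside domain is a classic
    # impersonation signal, however polished the message text is.
    if display_name.strip().lower() in EXECUTIVE_NAMES and domain not in TRUSTED_DOMAINS:
        reasons.append(f"executive display name from untrusted domain '{domain}'")

    # Payment requests deserve out-of-band verification regardless of sender.
    lowered = body.lower()
    for keyword in PAYMENT_KEYWORDS:
        if keyword in lowered:
            reasons.append(f"payment-related phrase: '{keyword}'")
    return reasons


if __name__ == "__main__":
    msg_from = '"Jane Doe" <jane.doe@examp1e.com>'
    msg_body = "Please process this wire transfer today. I'm in meetings."
    for reason in impersonation_flags(msg_from, msg_body):
        print(reason)
```

A hit is a prompt for out-of-band verification, such as calling the executive on a known number, not proof of fraud. Lookalike domains like `examp1e.com` are exactly what this kind of rule is meant to surface.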

Deepfakes and Video-Based Fraud

Deepfake technology, which uses AI to create realistic videos of people saying things they never actually said, has taken a dark turn in the hands of cybercriminals. By combining generative AI with audio and video manipulation tools, attackers can create convincing deepfake videos that appear to come from trusted sources, such as CEOs, government officials, or even family members.

How It Works: Generative AI models are trained on existing video and audio samples of a person to produce hyper-realistic simulations of them. Cybercriminals use these deepfakes for fraud, blackmail, or stock manipulation, for example by impersonating executives in fake public addresses.

Example: A cybercriminal could create a deepfake video of a CEO announcing a merger or acquisition to influence stock prices, or they could use a fabricated video to extort money from individuals by threatening to release false incriminating footage.

AI-Generated Malware and Ransomware

Generative AI has also found its way into malware development. In the past, creating effective malware required a deep understanding of programming. Today, generative AI can help write malware code, lowering the barrier to entry for cybercriminals and allowing them to create more targeted, evasive attacks.

How It Works: With generative AI tools, cybercriminals can develop malware that adapts to avoid detection by traditional cybersecurity systems. Such malware can modify its code or behavior to slip past antivirus programs, firewalls, and other defenses, making it much harder to stop.

Example: Ransomware attacks are now being enhanced with generative AI to quickly produce new strains of malware that target specific vulnerabilities in a network. Once deployed, the malware can keep changing to evade detection while it encrypts data and demands a ransom payment.

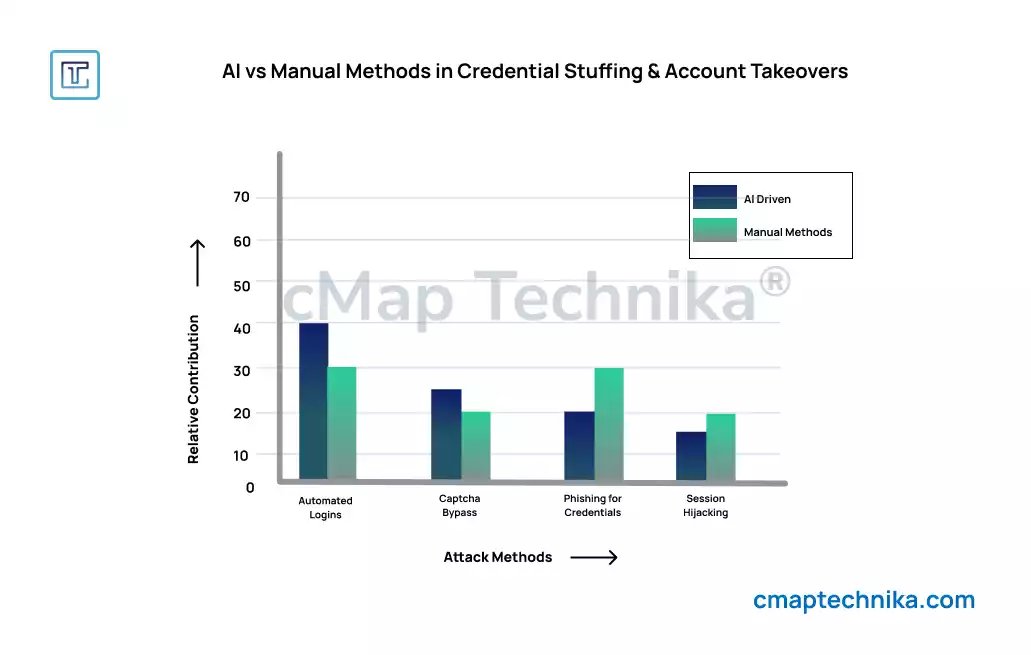

Automated Credential Stuffing and Account Takeovers

Another major concern is AI-assisted credential stuffing, in which cybercriminals use stolen usernames and passwords to gain unauthorized access to user accounts. These attacks have long relied on automated scripts, but AI can make them more efficient and harder to defend against.

How It Works: AI-assisted tools can quickly test stolen credentials across a wide range of websites and platforms while simulating human behavior to bypass security measures such as CAPTCHAs. Once valid credentials are found, attackers can use them for account takeovers, leading to financial fraud, identity theft, or data breaches.

Example: AI-driven credential stuffing tools can automate the process of logging into a user’s banking account or corporate system, gaining access to sensitive information or funds without triggering security alerts.
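On the defensive side, one common signal of credential stuffing is a single source failing logins across many different accounts in a short period, since attackers typically try each stolen username and password pair once rather than guessing repeatedly against one account. The Python sketch below counts distinct usernames per source IP over a sliding window; the class name `StuffingDetector` and its default thresholds are illustrative assumptions, not tuned values.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional, Tuple


class StuffingDetector:
    """Flag sources whose failed logins span many distinct usernames.

    Thresholds are illustrative assumptions and would need tuning for real traffic.
    """

    def __init__(self, window_seconds: float = 300.0, max_distinct_users: int = 20):
        self.window = window_seconds
        self.max_users = max_distinct_users
        # Maps a source IP to its recent (timestamp, username) failure events.
        self.failures: Dict[str, Deque[Tuple[float, str]]] = defaultdict(deque)

    def record_failure(self, source_ip: str, username: str,
                       now: Optional[float] = None) -> bool:
        """Record one failed login and return True if the source looks like stuffing."""
        now = time.time() if now is None else now
        events = self.failures[source_ip]
        events.append((now, username))
        # Evict events that have fallen out of the sliding window.
        while events and now - events[0][0] > self.window:
            events.popleft()
        # Many different accounts from one source is the tell-tale pattern;
        # repeated failures against a single account are a different problem.
        distinct_users = {user for _, user in events}
        return len(distinct_users) > self.max_users


if __name__ == "__main__":
    detector = StuffingDetector(window_seconds=60, max_distinct_users=3)
    for i, user in enumerate(["alice", "bob", "carol", "dave"]):
        flagged = detector.record_failure("203.0.113.7", user, now=1000.0 + i)
        print(user, "flagged" if flagged else "ok")
```

Run as a script, this prints `ok` for the first three usernames and `flagged` for `dave`. Attackers often rotate through proxies or botnets, so per-IP counting is only one signal; in practice it would be combined with others, such as device fingerprints and multi-factor authentication.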

Countering AI-Driven Cyber Threats

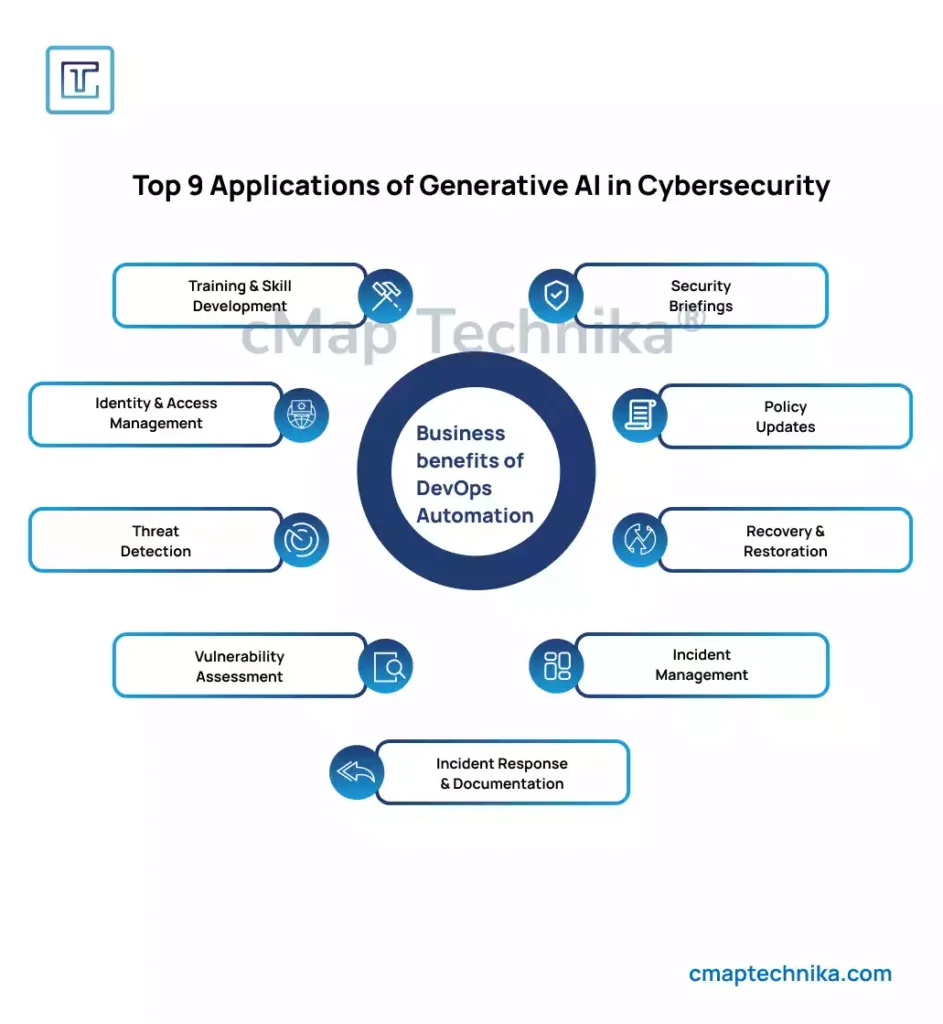

As cybercriminals continue to exploit generative AI for increasingly sophisticated attacks, businesses must step up their cybersecurity efforts to protect against these new threats. Here are some key strategies for countering AI-driven cybercrime:

- AI-Powered Threat Detection: Cybersecurity teams can use AI too. AI-powered threat detection systems can analyze patterns and behaviors to identify suspicious activity in real time, helping businesses catch AI-driven attacks before they cause significant damage.

- Multi-Factor Authentication (MFA): Implementing multi-factor authentication adds an extra layer of security to user accounts, making it harder for attackers to gain unauthorized access, even if they’ve obtained valid credentials through AI-driven methods.

- Employee Training and Awareness: Since social engineering and phishing are among the most common uses of generative AI in cybercrime, it’s crucial to train employees to recognize suspicious messages and tactics, such as unexpected requests for payments or credentials. Regular security awareness training can help reduce the risk of successful phishing attempts.

- Enhanced Data Encryption: Encrypting sensitive data both at rest and in transit makes stolen data far less useful to attackers, limiting the impact of data theft, including the data-leak threats that often accompany AI-powered ransomware attacks.

- Continuous Monitoring and Incident Response: Businesses must implement continuous monitoring to detect anomalies that may signal AI-driven attacks, and have an incident response plan in place to quickly address and mitigate attacks when they occur.
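To make the multi-factor authentication point concrete: most authenticator apps generate their codes with the time-based one-time password (TOTP) algorithm from RFC 6238. The minimal Python sketch below shows how a server might compute and verify such codes using only the standard library; the function names `totp` and `verify_totp`, the 30-second step, and the one-step drift allowance are illustrative choices rather than any particular product’s implementation.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 time-based one-time password (HMAC-SHA1 variant)."""
    t = time.time() if for_time is None else for_time
    counter = int(t // step)
    # RFC 4226: HMAC over the 8-byte big-endian counter value.
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte select a 4-byte window.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % (10 ** digits)).zfill(digits)


def verify_totp(secret: bytes, submitted: str, now: Optional[float] = None,
                step: int = 30, digits: int = 6, drift_steps: int = 1) -> bool:
    """Accept codes from the current step or +/- drift_steps to tolerate clock skew."""
    t = time.time() if now is None else now
    for delta in range(-drift_steps, drift_steps + 1):
        candidate_time = t + delta * step
        if candidate_time < 0:
            continue  # no valid time step before the Unix epoch
        candidate = totp(secret, candidate_time, step=step, digits=digits)
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(candidate, submitted):
            return True
    return False


if __name__ == "__main__":
    shared_secret = b"12345678901234567890"  # RFC 6238 test secret; never use in production
    code = totp(shared_secret)
    print("current code:", code)
    print("accepted:", verify_totp(shared_secret, code))
```

Authenticator apps usually share the secret as a base32 string, which can be decoded with `base64.b32decode`. A production system would also store secrets securely and reject a code that has already been used within its time step to prevent replay.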

Conclusion

Generative AI is no longer just a tool for innovation; it’s also a powerful weapon for cybercriminals looking to exploit weaknesses in cybersecurity defenses. From phishing and deepfakes to AI-generated malware and credential stuffing, the threats posed by generative AI are evolving rapidly. As we move further into 2025, businesses must prioritize AI-driven cybersecurity strategies to keep pace with these sophisticated threats.

At WaltCorp, we help businesses stay ahead of the curve by developing advanced cybersecurity solutions tailored to today’s digital threats. By adopting proactive measures and leveraging AI-driven defenses, organizations can better safeguard their data, reputation, and future.